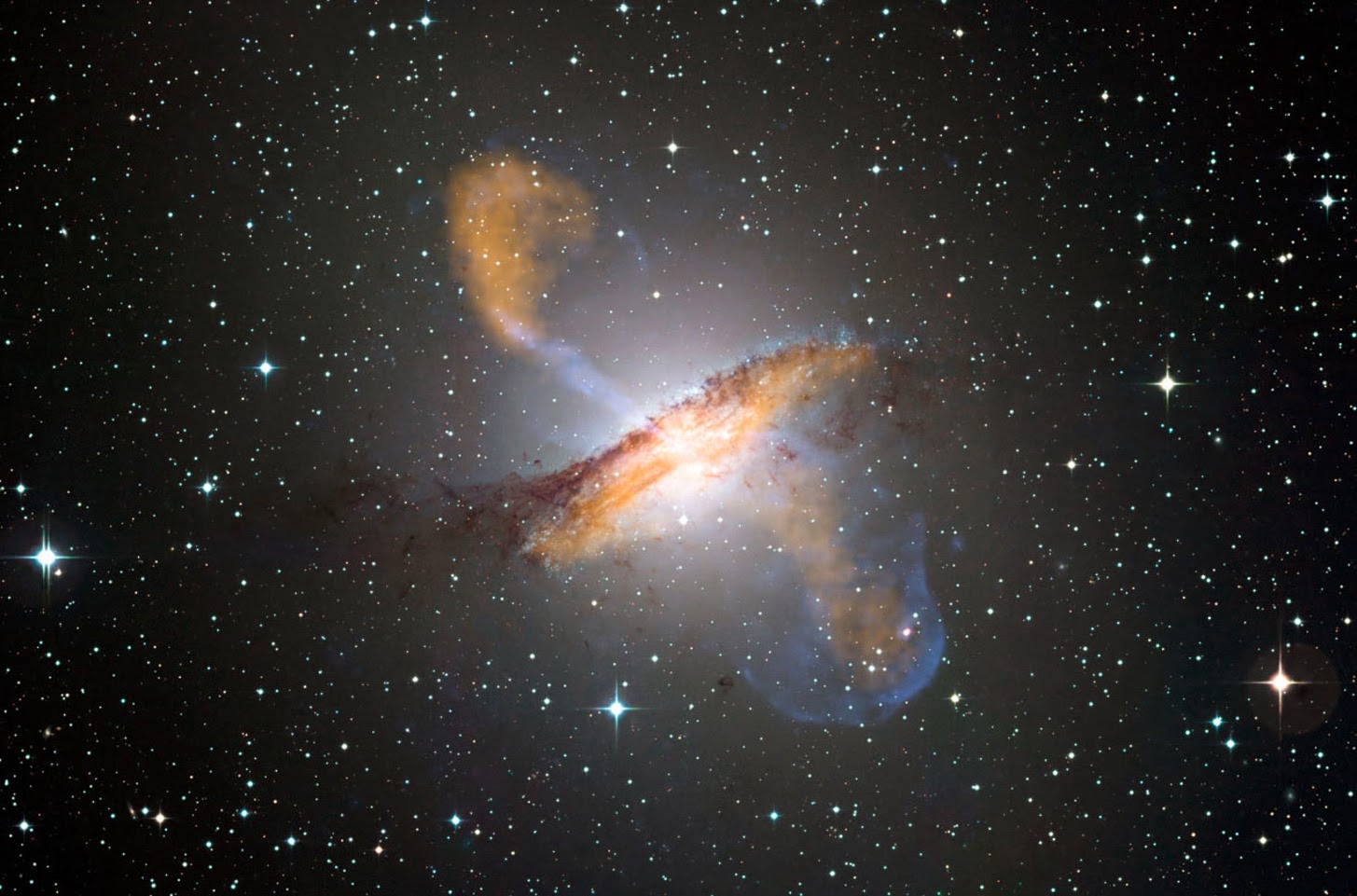

Belgian architect Vincent Callebaut poses as he presents a picture of one of his futuristic projects on January 10, 2014 in Paris

Imagine stepping out of your high-rise apartment into a sunny, plant-lined corridor, biting into an apple grown in the orchard on the fourth floor as you bid "good morning" to the farmer on his way to milk his cows on the fifth.

You take the lift to your office, passing the rice paddy and one of the many gardens housed in the glass edifice that not only heats and cools itself, but also captures rainwater and recirculates domestic waste as plant food.

No, this is not the setting for a futuristic movie about humans colonising a new planet.

It is the design of Belgian architect Vincent Callebaut for a 132-floor "urban farm", which he believes is the answer to a healthier, happier future for the estimated six billion people who will live in cities by 2050.

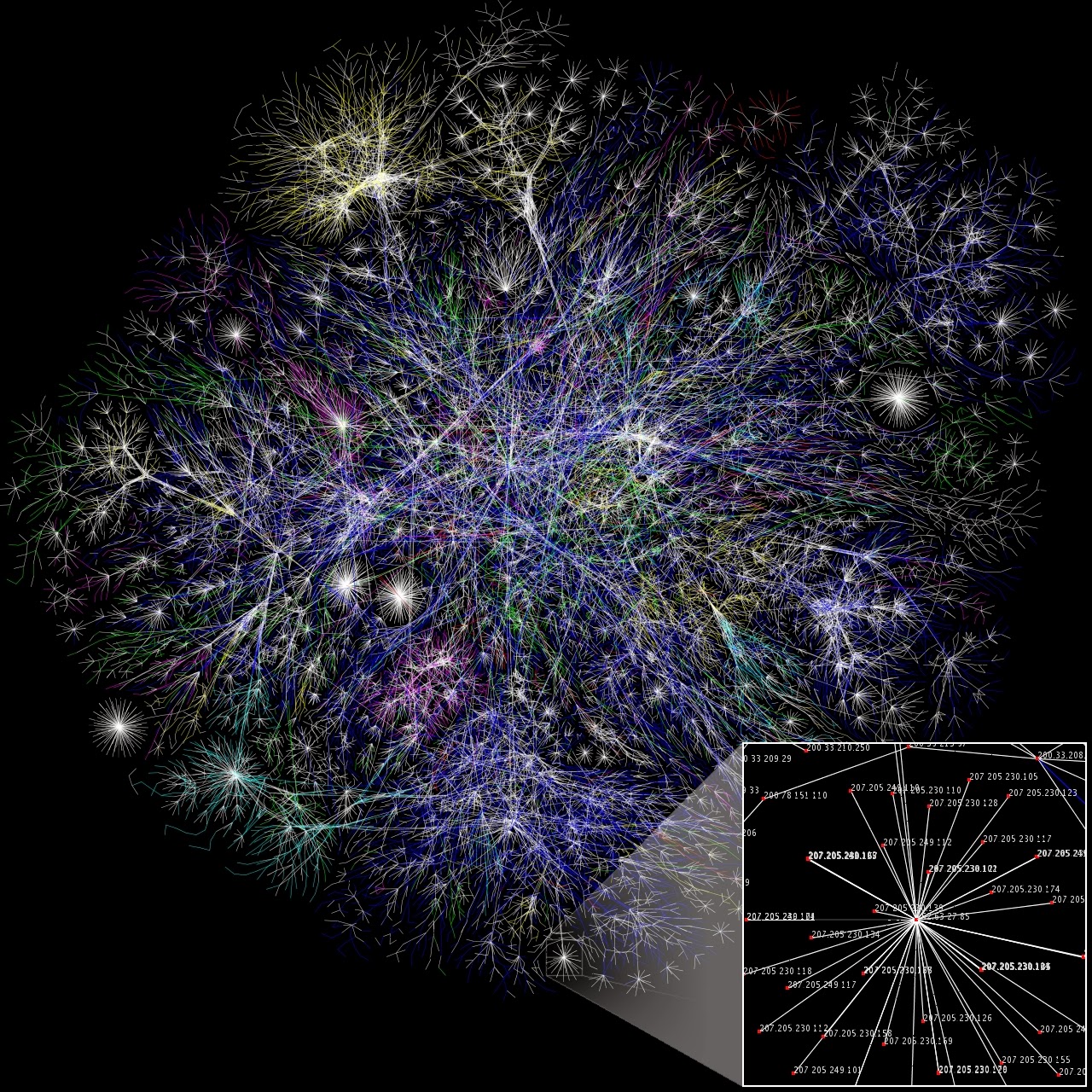

With food, water and energy sources dwindling, the city of the future will have to be a self-sufficient "living organism", said the 36-year-old designer of avant-garde buildings that some critics have dismissed as daft or a blight on the landscape.

"We need to invent new ways of living in the future," Callebaut told AFP at the Paris studio where he plies his trade.

"The city of tomorrow will be dense, green and connected. The goal is to bring agriculture and nature back into the urban core so that by 2050 we have green, sustainable cities where humans live in balance with their environment."

Each building, he said, must ultimately be a "self-sufficient, mini-power station."

The quest for sustainable urban living has never been more urgent as people continue flocking to cities, which encroach ever further onto valuable rural land, gobbling up scarce natural resources and making a disproportionate contribution to pollution and Earth-warming carbon emissions.

Enter Callebaut with his project "Dragonfly", a design for a massive, twin-towered "vertical farm" on New York's Roosevelt Island.

Belgian architect Vincent Callebaut poses in front of a picture of one of his futuristic projects on January 10, 2014 in Paris

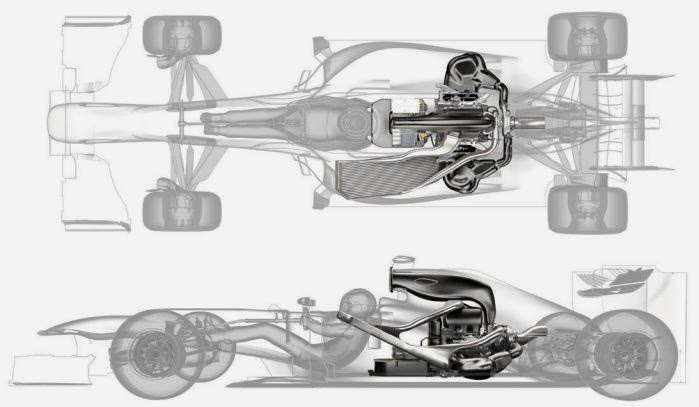

From each tower springs a large, glass-and-steel wing, so that the edifice resembles the insect after which it was named.

The draft structure includes areas for meat, dairy and egg production, orchards, meadows and rice fields along with offices and flats, gardens and public recreation spaces.

Energy is harvested from the Sun and wind, and hot air is trapped between the building "wings" to provide heating in winter. In summer, cooling is achieved through natural ventilation and transpiration from the abundant plant growth.

Plants grow on the exterior shell to filter rainwater, which is captured and mixed with liquid waste from the towers, treated organically and used as fertiliser.

And at the base of the colossus: a floating market on the East River where inhabitants can sell their organic produce.

"They made fun of me. They said I created a piece of science fiction," Callebaut says of his detractors.

But as awareness has grown of the plight of our planet, overpopulation and climate change, his ideas have gained traction, and the Dragonfly design has been exhibited at an international fair in China.

No buyers, but rising interest

Callebaut has also drafted a concept for a floating city resembling a lily pad that would house refugees forced from their homes by climate change. And he hopes to sell a design for a "farmscraper" in Shenzhen, China, that would include housing, offices, leisure space and food gardens.

As yet, Callebaut has found no buyers for these big projects.

"With the recent economic recession, politicians and government may... have been reluctant to venture into such new, large-scale endeavours that have not been tested before," Emilia Plotka, a sustainability expert at the Royal Institute of British Architects, told AFP of Dragonfly and similar projects.

But she pointed out the concept has inspired other, smaller projects.

"Instead of majestically tall bionic towers plonked in riverbeds, vertical farms have been rather more modestly integrated into existing buildings, derelict industrial sites and floating barges," said Plotka.

One example is the Pasona Urban Farm, a nine-storey office building in Tokyo that allows employees to grow their own food in specially reserved green spaces at work.

"Whilst the buy-in may not be as noticeable at the moment, it certainly is widespread and growing," said Plotka of the "vertical farm" movement.

"I suspect most other new vertical farms will remain hidden in disused urban spaces or existing business and domestic blocks, which is not bad at all as they will use fewer resources to be set up and enhance their surrounding environments and communities."

Phys.org
